Music and its Effect on Body, Brain/Mind: A Study on Indian Perspective by Neuro-physical Approach

Archi Banerjee*, Shankha Sanyal, Ranjan Sengupta, Dipak Ghosh

Sir CV Raman Centre for Physics and Music, Jadavpur University, Kolkata

Corresponding Author:

Archi Banerjee

Sir CV Raman Centre for Physics and Music

Jadavpur University, Kolkata 700032

Tel: +919038569341

E-mail: archibanerjee7@gmail.com

Received Date: September 20, 2015, Accepted Date: September 22, 2015, Published Date: September 29, 2015

Citation: Banerjee A, Sanyal S, Sengupta R, et al. Music and its Effect on Body, Brain/Mind: A Study on Indian Perspective by Neuro-physical Approach. Insights Blood Press 2015, 1:1.

Keywords

Music Cognition, Music Therapy, Diabetes, Blood Pressure, Neurocognitive Benefits

Music from the Beginning

The singing of the birds, the sounds of the endless waves of the sea, the magical sounds of drops of rain falling on a tin roof, the murmur of trees, songs, the beautiful sounds produced by strumming the strings of musical instruments–these are all music. Some are produced by nature while others are produced by man. Natural sounds existed before human beings appeared on earth. Was it music then or was it just mere sounds? Without an appreciative mind, these sounds are meaningless. So music has meaning and music needs a mind to appreciate it.

Music therefore may be defined as a form of auditory communication between the producer and the receiver. There are other forms of auditory communication, like speech, but the difference is that music is more universal and evokes emotion. It is also relative and subjective. What is music to one person may be noise to another.

The material essence of music lies in its melody, harmony, rhythm, and dynamics. Melody gives music soul, while rhythm blends the expression of harmony and dynamics with the tempo of the passage. All of these are necessary to create a recognizable pattern known as a "song."

“Rhythm”, by its simplest definition, reflects the dynamics of musical time. The origin of the perception of rhythm can be traced back to the heartbeat that a child hears in the womb. The socio-behavioural impact of rhythm is said to be manifested in dance, designed to boost our energy levels in order to cope with a fight-or-flight response. It can be said that perceiving rhythm is the ability to master the otherwise invisible dimension of time.

How Physics is Related to Music

Scientific research on music began in ancient times. Pythagoras in Greece and Bharata in India speculated on the rational and scientific basis of music to elucidate its fundamental structures. Music was first given numbers (the simple ratios of the octave, perfect fifth and perfect fourth), a step traditionally credited to Pythagoras. The works of eminent scientists such as Pythagoras and Helmholtz in the past, Raman and Kar after them, and Rossing and Sundberg later on threw a flood of light on many scientific aspects of music.
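
The simple ratios mentioned above can be illustrated with a short sketch. The 240 Hz tonic and the function name `interval_frequency` are hypothetical choices made only for illustration; only the three ratios themselves come from the text.

```python
from fractions import Fraction

# Simple whole-number frequency ratios named in the text.
INTERVALS = {
    "octave": Fraction(2, 1),
    "perfect fifth": Fraction(3, 2),
    "perfect fourth": Fraction(4, 3),
}


def interval_frequency(base_hz: float, interval: str) -> float:
    """Return the frequency lying the given interval above base_hz."""
    return base_hz * float(INTERVALS[interval])


# Hypothetical tonic of 240 Hz, chosen only to give round numbers.
tonic = 240.0
for name in INTERVALS:
    print(f"{name}: {interval_frequency(tonic, name):.1f} Hz")

# A fourth stacked on a fifth spans exactly one octave: 3/2 * 4/3 = 2.
stacked = INTERVALS["perfect fifth"] * INTERVALS["perfect fourth"]
print(stacked == INTERVALS["octave"])
```

Exact fractions are used so that the identity between stacked intervals holds without floating-point rounding.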

Modern science approaches these questions through the physics of voice production and the theory of articulation and perception. Technological developments have enabled us to analyse these areas precisely.

In India, Sir C V Raman, working in Kolkata, did pioneering research [1] on Indian musical instruments. Raman worked on the acoustics of musical instruments from 1909 to 1935 and regularly published his research in reputed journals such as Nature (London), the Journal of the Department of Science, University of Calcutta, the Philosophical Magazine and the publications of the Indian Association for the Cultivation of Science, as well as in various national and international proceedings. He worked out the theory of transverse vibration of bowed strings on the basis of the superposition of velocities. He was also the first to investigate the harmonic nature of the sound of Indian drums such as the tabla and the mridangam [2]. He also did pioneering work on the violin family and the ektara. “On the wolf note of the violin and cello”, “The kinematics of bowed string”, “The musical instruments and their tones” and “Musical drums with harmonic overtones” are some examples of his published work. Raman was fascinated by waves and sounds and always carried in his mind the memory of reading Helmholtz’s book ‘The Sensations of Tone’ in his school days. His work on musical instruments is the biggest motivation for research in the area. After Sir C V Raman, research on musical instruments was carried forward by S Kumar, K C Kar, B S Ramakrishnan and B M Banerji during the mid-twentieth century. After that there was a big void in research on the sound of Indian musical instruments.

Although a great deal of work on the production, analysis, synthesis, composition and perception of Western music has been reported in the West, systematic investigation in these directions is yet to make its mark in the area of Indian music. Since music is a temporal art, its proper study necessarily includes a method of capturing, representing and interpreting information about successive moments of time.

Some excellent and rigorous research in the area of music was done at the ITC Sangeet Research Academy from 1983 to 2010. At present, a cohesive, broad-based and planned research effort in this direction has been spearheaded since 2005 by the Sir C V Raman Centre for Physics and Music, Jadavpur University, alongside sporadic research by individuals in various research organizations.

In the early days of modern science, before the advent of Artificial Intelligence, scientific research on music remained primarily concerned with the physics of sound and vibration. Examples include the mathematical analysis of string, wind and percussion instruments. The cognitive and experiential aspects of music were not taken much note of. The emergence of Artificial Intelligence appears to coincide with the inclusion of the cognitive aspects of music within the ambit of modern scientific research.

A scientific understanding of music must begin by taking into account how minds act in the ambience of music. Music, like speech, is a mode of communication between human beings. The communicator endeavors to communicate certain messages, be it mood, feelings, expression and the like. Through this he creates a story, a sort of ambience for the audience. In a sense music appears to be a more fundamental and universal phenomenon than speech. In speech communication the listener has to know the language of the speaker to get the message. In creating music an artist produces an objective material called sound. The semiotics of music consists of lexicon (chalan, pakad), syntax (raga), pragmatics (thaat, gharana) and semantics (mood, feeling, emotion). Thus if science is to probe music, it has to take into account these semiotics and the cognitive processes taking place in the mind, along with the acoustics. As in the case of a language, the semiotics here is also completely language-dependent. This needs to be borne in mind when one takes up a particular music for study. A comprehensive scientific approach therefore needs to address the physical users. It appears that the public reposes faith in the modern scientific approaches.

How Music Affects our Brains

Music seems to be present in all cultures, and it appears to be very deep-rooted in the human psyche. We know that music is a perceptual entity and is controlled by our auditory mechanisms. Music affects emotions and mood. It makes people want to dance. It is intensely connected to memories. People can remember song lyrics from decades ago without any effort. The emotional content of music is very subjective. A piece of music may be undeniably emotionally powerful, and at the same time be experienced in very different ways by each person who hears it.

Music engages much of the brain, and coordinates a wide range of processing mechanisms. This naturally invites consideration of how music cognition might relate to other complex cognitive abilities. The tremendous ability that music has to affect and manipulate emotions and the brain is undeniable, and yet largely inexplicable. Very little serious research has gone into the mechanism behind music's ability to physically influence the brain, and even now knowledge about the neurological effects of music is scarce.

How We Study the Effects of Music on the Human Brain from a Neuro-physics Approach

The human brain, one of the most complex organic systems, involves billions of interacting physiological and chemical processes that give rise to experimentally observed neuro-electrical activity, recorded as the electroencephalogram (EEG). Music can be regarded as an input to the brain system that influences the human mental state over time. Since music cognition has many emotional aspects, it is expected that EEG recorded during music listening may reflect the electrical activities of brain regions related to those emotional aspects. The results might reflect the level of consciousness and the brain's activated area during music listening. It is anticipated that this approach will provide a new perspective on cognitive musicology.

Music is widely accepted to produce changes in affective (emotional) states in the listener. However, the exact nature of the emotional response to music is an open question, and it is not immediately clear that emotional responses induced by music would have the same neural correlates as emotions induced by other modalities. Although a picture of the relationship between induced emotions and brain activity is emerging, there is a need for further refinement and exploration of the neural correlates of emotional responses induced by music.

Music in India has great potential for this kind of study because Indian music is melodic and involves somewhat different pitch perception mechanisms. Western classical music is based on harmonic relations [3] between notes, whereas the Indian Classical Music (ICM) system is built on melodic mode (raaga) structures within a rhythmic cycle; the two may therefore demand qualitatively different cognitive engagement. Analysing EEG data to determine the relation between the state of the brain in the presence and in the absence of ICM would therefore be an interesting study. How rhythm, pitch, loudness etc. interrelate to influence our appreciation of the emotional content of music might be another important area of study. Such work might yield a technique to monitor the course of activation in the time domain in a three-dimensional state space, revealing patterns of global dynamical states of the brain. It might also be interesting to see whether the arousal activities remain after removal of the music stimuli.

Research on the Effect of Music on the Production of Neurotransmitters, Hormones, Cytokines, and Peptides

• Music is widely regarded as a means of enjoyment and entertainment. However, music has also been used toward improving the well-being of patients. While the brain interprets music, successive biochemical reactions are induced within the body. Evidence indicates that music plays a role in activating pleasure-seeking areas of the brain that become stimulated by food, sex, and drugs.

• The biological effects of music have mostly been studied through heart rate variability (HRV) and blood pressure, with the aim of explaining the cognitive background of musical appreciation, stress relief and emotion identification. The messengers that carry signals within the body range from small to large molecules, all of which are vital for physiological health.

• Music of different genres and musical patterns leads to the production of distinct types of messengers in the body, as a number of earlier studies have reported. While the music of Johann Strauss induced enhancement in atrial filling fraction and atrial natriuretic peptide and decrement in cortisol and tissue-type plasminogen activator (t-PA), Ravi Shankar’s music resulted in lowered concentrations of cortisol, noradrenaline, and t-PA [4-6]. Listening to techno music was found to alter levels of β-endorphin, adrenocorticotropic hormone (ACTH), norepinephrine, growth hormone, prolactin, and cortisol in healthy people [7,8]. Critically ill patients who listened to Mozart’s slow piano sonatas had increased growth hormone and decreased interleukin (IL) levels [9]. Appreciation of a mixed selection of rock music increased salivary immunoglobulin A (IgA) [10]. The effects of major and minor modes and intensity of music on cortisol production have also been studied [11].

• Music also is known to aid in fighting cerebrovascular disease by activation of parasympathetic nervous system, lowering concentrations of IL-6, tumor necrosis factor (TNF), adrenaline, and noradrenaline. Adrenocorticotropic hormone, cortisol, adrenaline, and noradrenaline also have been measured before and after gastroscopy. Biochemical messenger production has been found influential in providing a calming effect in elderly patients with Alzheimer dementia. Music has proven effective in improving the immune function.

• Exploring the exact functions of cytokines, neurotransmitters, hormones, peptides, and other messengers requires further research. While exposure to music may reveal such functions through trends in messenger production, these trends are not by any means causative. Complexity is apparent when discussing the aggregate effects of the messengers. A trend in the production of a particular messenger may be offset or amplified by the potency of another messenger. Thus, pathways of messenger production prove crucial to understanding the connections between the mind and the body. One study has shown that music may balance messenger levels by increasing and decreasing steroids in those with low and high hormone levels, respectively. Further research on the link between messenger outputs and physiological homeostasis remains to be done.

• Research on music therapy has shown that it can decrease pain and anxiety in critical care patients. Music has demonstrated effectiveness in reducing pain, decreasing anxiety, and increasing relaxation. In addition, music has been used to distract persons from unpleasant sensations and empower them with the ability to heal from within [12,13]. Music is an effective adjunct to a pharmacologic antiemetic regimen for lessening nausea and vomiting, a finding that merits further investigation through a larger multi-institutional effort [14]. Listening to music under general anesthesia did not reduce preoperative stress hormone release or opioid consumption in patients undergoing gynecological surgery [15].

Review of Status of Research and Development in the Subject

International status

Research in the area of Music, Brain, & Cognition aims to shed light on some of the key issues in current and future music research and technology. Cognitive musicology was envisaged by Seifert et al. [16] and Leman et al. [17] to be composed from diverse disciplines such as brain research and artificial intelligence striving for a more scientific understanding of the phenomenon of music. In recent years, computational neuroscience has attracted great aspirations, exemplified by the silicon retina [18] and the ambitious Blue Brain Project that aims at revolutionising computers by replacing their microcircuits by models of neocortical columns [19]. Research activity in auditory neuroscience, applied to music in particular, is catching up with the scientific advances in vision research. Shamma et al. [20] proposed that the same neural processing takes place for the visual as well as for the auditory domain. Other researchers suggested biologically inspired models specific to the auditory domain; e.g., Smith and Lewicki [21] decomposed musical signals into gammatone functions that resemble the impulse response of the basilar membrane measured in cats.

The fast advancement of the brain computer interface [22] and brain-imaging methodology such as the electroencephalogram has further encouraged music research. Brain imaging grants access to music-related brain processes directly rather than circuitously via psychological experiments and verbal feedback by the subjects. A lot of experimental work in auditory neuroscience has been performed, in particular exploring the innate components of music abilities. In developmental studies of music, magnetoencephalograms have been used to study fetal music perception [23]. Mismatch negativity in newborns has shown how babies discriminate pitch, timbre, and rhythm [24]. A summary of electroencephalogram research in music leads Koelsch and Siebel [25] to a physiologically inspired model composed of modules, e.g., for gestalt formation and structure building where the special features of the model are the feedback connections enabling structural reanalysis and repair.

We may assume a functional and physiological separation between sequential processing (related to musical syntax and grammar), located in Broca's area [26,27], and the processing of timing information, located in the right temporal auditory cortex and superior temporal gyrus [28]. Sequential processing can be seen as statistical learning [29], in this case learning Nth-order transition sequences. The idea of statistical learning was anticipated by Leibniz [30]: 'Music is the hidden mathematical endeavour of a soul unconscious it is calculating'. On the other hand, timing information is closely related to movement planning and kinematics. The relation between timing aspects of music and movement is emphasised by the concept of mirror neurons. A mirror neuron would be active not only when the individual is articulating themselves vocally but also when the individual is observing or listening to another individual articulating a communicative sound. Prather, Peters, Nowicki, and Mooney [31] found neurons in the sparrow's forebrain that establish an auditory-vocal correspondence. Models of musical timing are often based on oscillators, the Fourier transform, or autocorrelation [32-34].

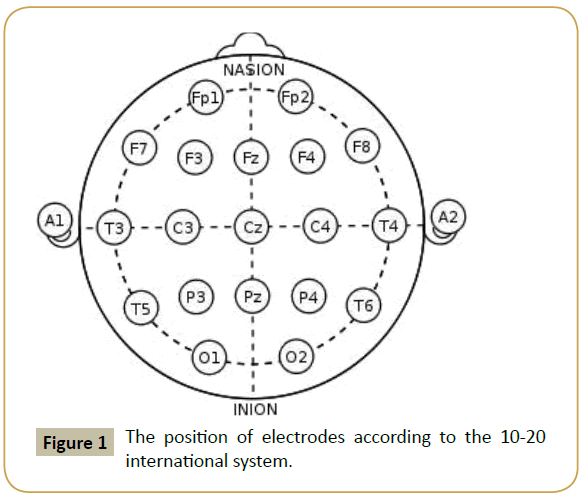

EEG has been used in cognitive neuroscience to investigate the regulation and processing of emotion for the past few decades. Figure 1 shows the positioning of the EEG electrodes according to the international 10-20 system. Linear signal analysis methods such as the Power Spectral Density (PSD) of the alpha, theta and gamma EEG frequency rhythms have been used as indicators to assess musical emotions [35-37]. Asymmetry in alpha or theta power among different regions of the brain has been used in a number of studies as an emotion identification algorithm [38-40]. Though the frontal lobe has proven to be the most vital for the processing of musical emotions in healthy as well as depressed individuals [41], other lobes have also been identified as being associated with emotional responses. Alpha power has been shown to change at the right parietal lobe [42,43], as has theta power [44]; pleasant music produces an increase in frontal midline (Fm) theta power [45]; and the degree of gamma band synchrony over distributed cortical areas was found to be significantly higher in musicians than in non-musicians [46]. Human beings interact with music both consciously and unconsciously at behavioral, emotional and physiological levels. Listening to music and appreciating it is a complex process that involves memory, learning and emotions. Music is remarkable for its ability to manipulate emotions in listeners. However, the exact way in which the brain processes music is still a mystery and is known to be associated with several brain oscillations in combination [47]. It is therefore important to explore how specific features of music trigger neurophysiological, psychophysiological, emotional and behavioral responses.

Using neuro-bio sensors such as EEG/ECG/GSR to assess a variety of emotional states induced by music is still in its infancy, compared to work in the psychological domain using various audio-visual methods.
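
As an illustration of the asymmetry measures mentioned above, the sketch below computes a log-ratio asymmetry index from the band power at a left and a right electrode. The log-ratio form is one common convention and is an assumption here, not necessarily the definition used in the cited studies; the electrode pair (F3, F4) and the power values are hypothetical.

```python
import math


def asymmetry_index(power_left: float, power_right: float) -> float:
    """Log-ratio asymmetry: positive when right-side power is larger."""
    if power_left <= 0 or power_right <= 0:
        raise ValueError("band powers must be positive")
    return math.log(power_right) - math.log(power_left)


# Hypothetical alpha-band powers (arbitrary units) at a left/right
# frontal electrode pair such as F3 and F4; the values are invented.
alpha_f3, alpha_f4 = 4.0, 5.0
index = asymmetry_index(alpha_f3, alpha_f4)
print(f"alpha asymmetry index: {index:.3f}")
```

Taking the difference of logarithms makes the index symmetric about zero, so swapping the two electrodes only flips its sign.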

Ishino and Hagiwara [48] used a neural network technique to develop a system in which the subjective feelings of respondents were used to categorize emotional states based on EEG features. Takahashi [49] proposed an emotion-recognition system combining EEG, pulse rate and skin conductivity as biosensors. The system used the Support Vector Machine (SVM) algorithm to classify emotions and obtained a decent accuracy rate for five emotional states. Another EEG-based work by Chanel et al. [50] reported an emotion assessment algorithm with high accuracy for three categories of emotion. They performed the classification using the Naïve Bayes classifier applied to EEG characteristics derived from specific frequency bands. Heraz et al. [51] established an agent to predict emotional states during learning. The best classification in the study was an accuracy of 82.27% for distinguishing eight emotional states, using k-nearest neighbors as a classifier and the amplitudes of four EEG components as features. Chanel et al. [52] reported an average accuracy of 63% by using EEG time-frequency information as features and SVM as a classifier to characterize EEG signals into three emotional states. Ko et al. [53] demonstrated the feasibility of using EEG relative power changes and a Bayesian network to predict the possibility of a user’s emotional states. Also, Zhang and Lee [54] proposed an emotion understanding system that classified users’ status into two emotional states with an accuracy of 73.0% ± 0.33% during image viewing. The system employed asymmetrical characteristics at the frontal lobe as features and SVM as a classifier. However, most of these works focused only on EEG spectral power changes in a few specific frequency bands or at specific scalp locations. No study has yet been conducted to systematically explore the correspondence between emotional states and EEG spectral changes across the whole brain and relate the findings to those previously reported in the emotion literature.
Figure 1
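
The classifiers surveyed above all map a vector of EEG features to an emotion label. As a rough illustration of the idea, here is a minimal k-nearest-neighbour sketch. The two features, the labels and all numbers are invented for illustration and are not taken from any of the cited studies.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest training points.

    train: list of (feature_vector, label) pairs; distance is Euclidean.
    """
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]


# Hypothetical two-feature examples (say, relative alpha and theta power).
training_set = [
    ((0.80, 0.20), "calm"),
    ((0.75, 0.30), "calm"),
    ((0.70, 0.25), "calm"),
    ((0.20, 0.70), "excited"),
    ((0.30, 0.80), "excited"),
    ((0.25, 0.75), "excited"),
]

print(knn_predict(training_set, (0.72, 0.22)))
```

A real system would need many labelled recordings, feature scaling and cross-validation before any accuracy figure could be quoted.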

It was found that EEG signals respond differently to different types of music. The nature of the EEG and the effect of different types of music on the brain were studied in [32], which showed that the frequency of the EEG signal changes with the type of music. The middle abdomen area on the pons side of the brain was the focus of this study. Bhattacharya et al. presented a phase synchrony analysis of EEG in five standard frequency bands: delta (<4 Hz), theta (4–8 Hz), alpha (8–13 Hz), beta (13–30 Hz), and gamma (>30 Hz) [33]. The analysis was done using indices like coherence and correlation in two groups, musicians and non-musicians. They observed a higher degree of gamma band synchrony in musicians. Frequency distribution analysis and independent component analysis (ICA) have been used to analyze the EEG responses of subjects to different musical stimuli; it was shown that some of these EEG features were unique to different musical stimuli [34].
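
The band definitions above can be turned into a simple band-power estimate. The sketch below is an illustration only: it uses a plain discrete Fourier transform periodogram for clarity, whereas practical EEG work would normally use an FFT-based estimator. The 0.5 Hz lower edge for delta, the 45 Hz upper edge for gamma, the 128 Hz sampling rate and the synthetic test signal are all assumptions added here.

```python
import cmath
import math

# Band edges in Hz follow the text; the 0.5 Hz delta floor and the 45 Hz
# gamma ceiling are assumptions so the open-ended bands have finite limits.
BANDS = {
    "delta": (0.5, 4),
    "theta": (4, 8),
    "alpha": (8, 13),
    "beta": (13, 30),
    "gamma": (30, 45),
}


def band_powers(signal, fs):
    """Sum periodogram power of `signal` (sampled at fs Hz) in each band."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [x - mean for x in signal]
    powers = dict.fromkeys(BANDS, 0.0)
    for k in range(1, n // 2 + 1):
        freq = k * fs / n
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * t / n)
            for t, x in enumerate(centered)
        )
        for name, (low, high) in BANDS.items():
            if low <= freq < high:
                powers[name] += abs(coeff) ** 2 / n
    return powers


# Synthetic two-second signal: strong 10 Hz (alpha) plus weaker 6 Hz (theta).
fs = 128
signal = [
    math.sin(2 * math.pi * 10 * t / fs) + 0.3 * math.sin(2 * math.pi * 6 * t / fs)
    for t in range(2 * fs)
]
powers = band_powers(signal, fs)
dominant = max(powers, key=powers.get)
print(dominant)
```

On this synthetic input the alpha band dominates because the 10 Hz component has the largest amplitude.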

Lin et al. [35] used independent component analysis (ICA) to systematically assess spatio-spectral EEG dynamics associated with the changes of musical mode and tempo. The results showed that music with major mode augmented delta-band activity over the right sensorimotor cortex, suppressed theta activity over the superior parietal cortex, and moderately suppressed beta activity over the medial frontal cortex, compared to minor-mode music, whereas fast-tempo music engaged significant alpha suppression over the right sensorimotor cortex.

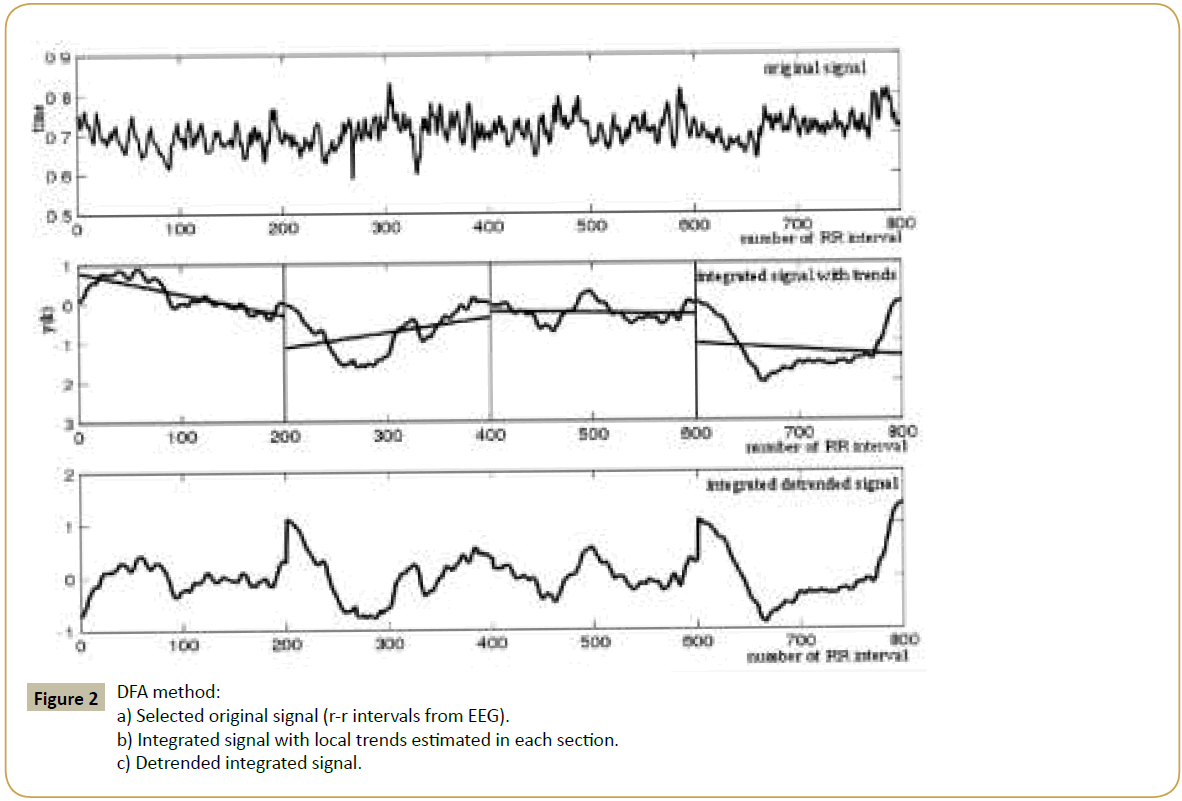

The use of nonlinear parameters to determine music-induced emotional states from EEG data is very scarce in the literature. Natarajan et al. [36] evaluated nonlinear parameters such as Correlation Dimension (CD), Largest Lyapunov Exponent (LLE), Hurst Exponent (H) and Approximate Entropy (ApEn) from EEG signals under different mental states. The results show that, with a confidence level of more than 85%, the EEG becomes less complex under stimulation relative to the normal state. The measures are significantly lower when the subjects are under sound or reflexologic stimulation than in the normal state. The dimension increases with the degree of cognitive activity. This suggests that when the subjects are under sound or reflexologic stimuli, the number of parallel functional processes active in the brain is smaller and the brain goes to a more relaxed state. Gao et al. [37] were the first to apply the scaling technique called Detrended Fluctuation Analysis (DFA) to EEG signals to assess the emotional intensity induced by different musical clips. In this study two scaling exponents, β1 and β2, were obtained, corresponding to the high and low alpha band. It was concluded that emotional intensity was inversely proportional to β1 and directly proportional to β2.

National status

Despite the world’s diversity of musical cultures, the majority of research in cognitive psychology and the cognitive neuroscience of music has been conducted on subjects and stimuli from Western music cultures. From the standpoint of cognitive neuroscience, identifying the fundamental cognitive and neurological processes associated with music requires ascertaining that such processes are demonstrated by listeners from various cultural backgrounds and by music across cultural traditions. It is unlikely that individuals from different cultural backgrounds employ different cognitive systems in the processing of musical information. It is more likely that different systems of music make different cognitive demands.

Western classical music is based on harmonic relations between notes, whereas the Indian classical music (ICM) system is built on melodic mode (raaga) structures within a rhythmic cycle; the two may demand qualitatively different cognitive engagement. To elaborate on this point, ICM is monophonic or quasi-monophonic. Unlike the Western classical system, it has no special notation system for music. Instead, letters from the colloquial languages are used to write music. For instance, ICM notations such as ‘Sa, Re, Ga’ may be written in Hindi, Kannada or Tamil, whereas Western classical music uses a unique visuo-spatial representation that emphasizes reading the exact position of a symbol indicating a whole tone or a semitone on the treble or bass clef. The scale systems (raagas) are quite elaborate and complex, and provide a strict framework within which the artist is expected to bring out maximum creativity. Although a specific emotion (rasa) is associated with a particular raaga, it is well known that the same raaga may evoke more than one emotion. Well-trained artists are able to highlight a particular rasa by altering the structures of musical presentation, such as stressing specific notes, accents, slurs, gamakas or taans varying in tempo. Musicians as well as ardent connoisseurs of music would agree that every single note has the ability to convey an emotion. Many experience a ‘chill’ or ‘shiver down the spine’ when a musician touches or sustains a certain note. The Indian rhythm and metre system is again one of the most complex among the meters used in world music. Film music, which has been influenced by music from all over the world, is much more popular in current times. Implicit knowledge of the Western chord system is therefore perhaps present in our population.

ICM is chiefly an oral tradition, with importance given to memorizing compositions and raaga structures, and differences exist in the methods of training even within the two traditional systems of ICM. The semantics of Indian music differ from those of the Western classical system or other musical systems. More often than not, music in Indian culture is intimately associated with religious and spiritual practices.

Hypothetically, these differences in the musical systems make qualitatively different demands on the cognitive functions involved, and thereby engage to qualitatively varying degrees the specialized neural networks implicated in musical processing. Research endeavours are yet to be carried out in this direction.

In India, research in the area of music cognition is still in its infancy. The effect of Indian classical music and rock music on brain activity (EEG) was studied using the Detrended Fluctuation Analysis (DFA) algorithm and the Multi-scale Entropy (MSE) method [55]. Figure 2 gives a detailed description of the methodology employed in the DFA analysis. This study concluded that the entropy was high for both types of music and that the complexity of the EEG increases when the brain processes music. Another work in the linear paradigm [56] analyses the effect of music (Carnatic, hard rock and jazz) on brain activity during mental workload using EEG. EEG signals were acquired under three experimental conditions (rest, music without mental task and music with mental task). The findings show that while listening to jazz music, the alpha and theta powers at Cz were significantly (p<0.05) higher at rest than during music with and without mental task. While listening to Carnatic music, the beta power at the Cz and Fz locations was significantly (p<0.05) higher with the mental task than at rest and during music without mental task. The study concluded that attention-based activities are enhanced during a mental task while listening to jazz and Carnatic music as compared to hard rock. Chen et al. [57] monitored brain wave variation while changing the music type (techno and classical); the results showed a significant plunge in the alpha band when the music was switched from classical to techno, and an increase in beta activity when it was switched from techno to classical.
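
To make the multi-scale entropy idea concrete, the sketch below coarse-grains a series at several scales and computes the sample entropy of each coarse-grained version. It is a generic illustration on synthetic data, not the procedure of [55]; the embedding length m = 2 and the tolerance of 0.2 times the standard deviation of the original series are conventional choices assumed here.

```python
import math
import random
import statistics


def coarse_grain(series, scale):
    """Average consecutive non-overlapping blocks of length `scale`."""
    n_blocks = len(series) // scale
    return [
        sum(series[i * scale:(i + 1) * scale]) / scale for i in range(n_blocks)
    ]


def sample_entropy(series, m, r):
    """Sample entropy -ln(A/B): B and A count template pairs that match
    within tolerance r (Chebyshev distance) at lengths m and m + 1."""
    n = len(series)

    def count_matches(length):
        templates = [series[i:i + length] for i in range(n - m)]
        count = 0
        for i in range(len(templates)):
            for j in range(i + 1, len(templates)):
                if max(abs(a - b) for a, b in zip(templates[i], templates[j])) <= r:
                    count += 1
        return count

    b, a = count_matches(m), count_matches(m + 1)
    if a == 0 or b == 0:
        return float("inf")
    return -math.log(a / b)


def multiscale_entropy(series, max_scale=5, m=2, r_factor=0.2):
    """Sample entropy of the coarse-grained series for scales 1..max_scale."""
    r = r_factor * statistics.pstdev(series)  # tolerance fixed from the original
    return [
        sample_entropy(coarse_grain(series, s), m, r)
        for s in range(1, max_scale + 1)
    ]


random.seed(1)
noise = [random.gauss(0.0, 1.0) for _ in range(600)]
mse = multiscale_entropy(noise)
print([round(value, 2) for value in mse])
```

For uncorrelated noise the entropy is expected to fall as the scale grows, whereas signals with structure across time scales tend to keep their entropy, which is why the curve over scales is read as a complexity measure.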

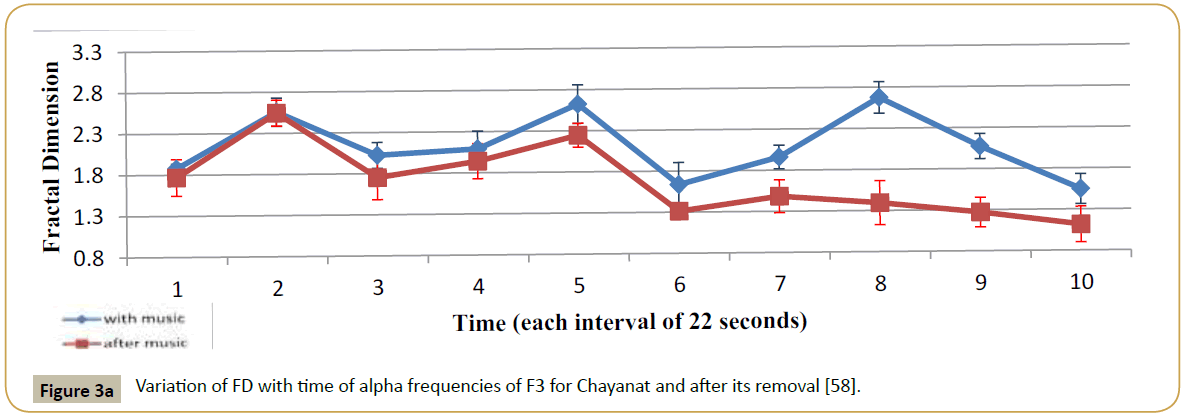

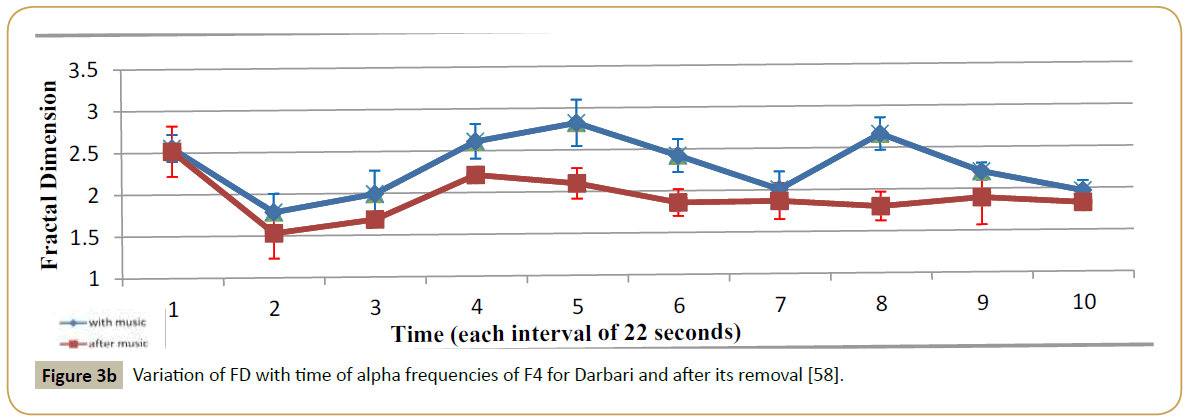

Hysteresis effects in the brain were studied using Indian classical music of contrasting emotions [58]. The analysis confirmed the enhancement of arousal-based activities during listening to music in both subjects. Additionally, it was observed that when the music stimuli were removed, significant alpha brain rhythms persisted, showing residual arousal activities. This is analogous to ‘hysteresis’, where the system retains some ‘memory’ of the former state. Figures 3a and 3b give a graphical demonstration of the ‘retention’ of musical memory corresponding to the alpha frequency rhythm [58]. The use of voids in Indian classical music has been studied in [59,60]. By analyzing these voids along with the notes in the signal and their durations, the characteristics of a particular artist and his style can be obtained. The note sequence at the onset of voids is also an important cue for style analysis of an artist as well as for the emotional state of the artist.

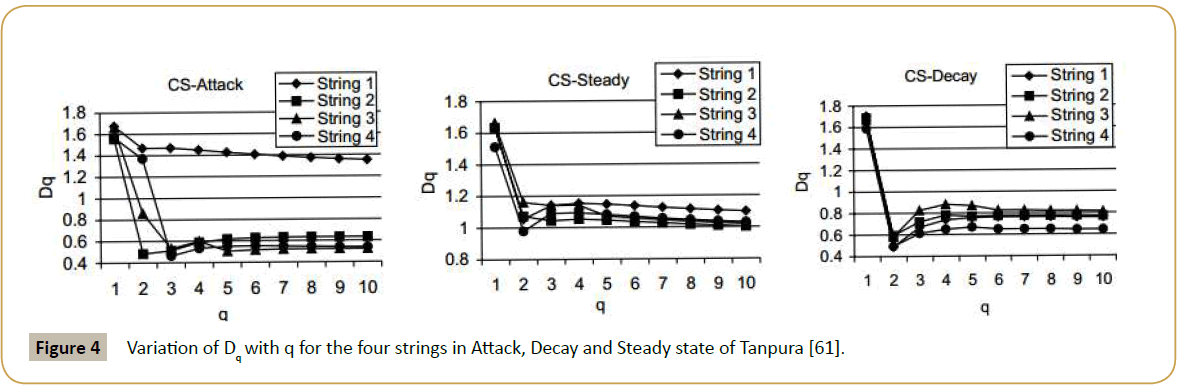

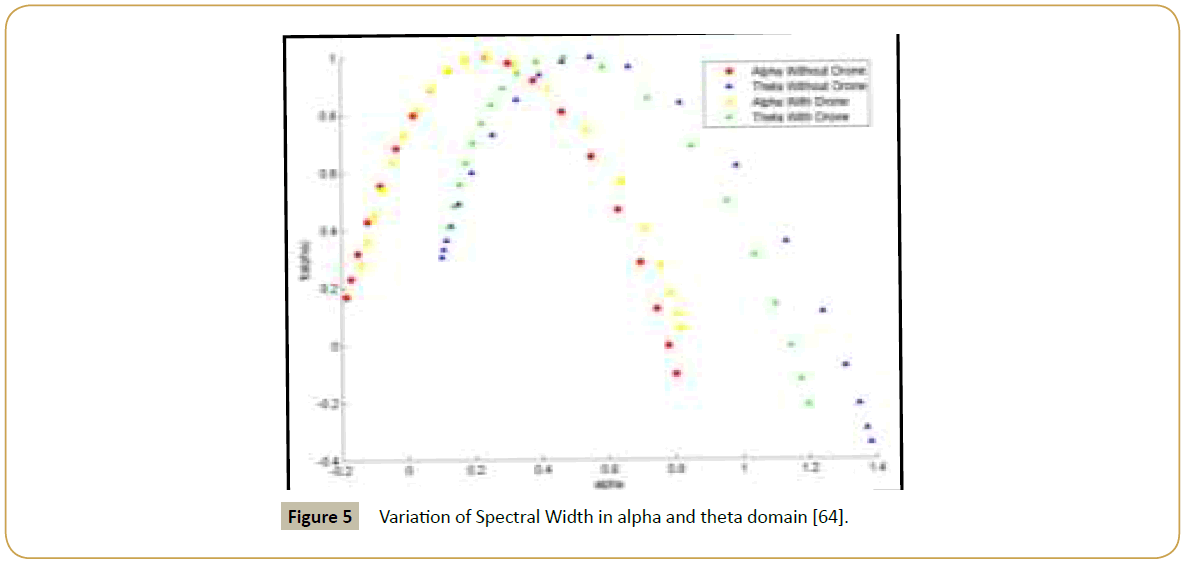

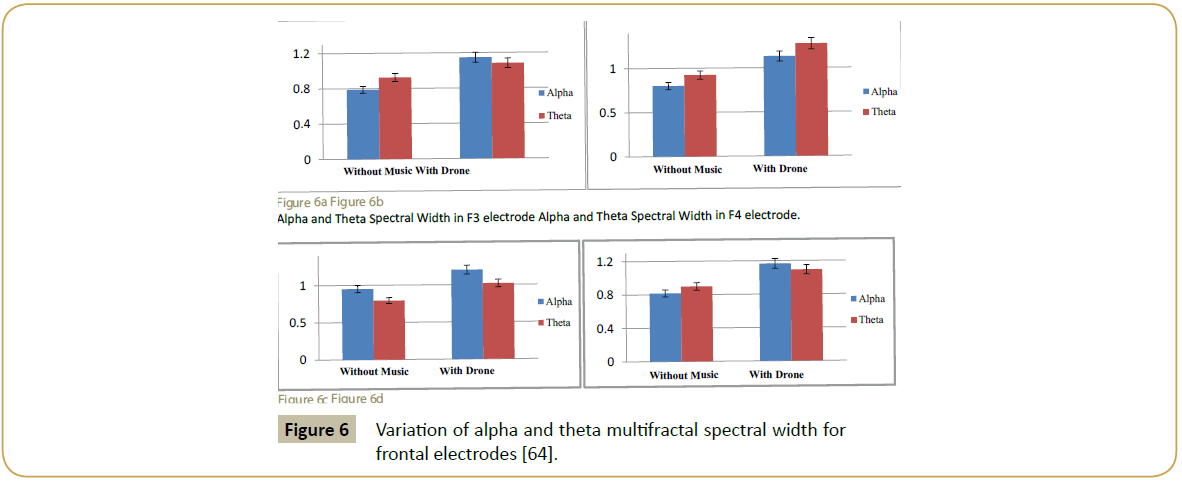

The presence of multifractality in tanpura signals has been studied through an examination of the relationship between q and Dq and the functional relationship between the Dq values [61]. Figure 4 shows graphically the variation of Dq with q corresponding to the four tanpura strings. Braeunig et al. [62] describe a new conceptual framework for using the tanpura drone for auditory stimulation in EEG. In a laboratory setting, spontaneous brain electrical activity was observed during tanpura drone stimulation and periods of silence. The brain-electrical response of the subject is analyzed with global descriptors, a way to monitor the course of activation in the time domain in a three-dimensional state space, revealing patterns of global dynamical states of the brain. A fractal technique has been applied to assess the change of brain state when subjected to audio stimuli in the form of a tanpura drone [63]. The EEG time series was used for this study, and the corresponding non-linear EEG waveform was analyzed with the widely used DFA technique. The investigation clearly indicates that the fractal dimension (FD), a very sensitive parameter, is capable of distinguishing brain states even with an acoustic signal of simple musical structure. A recent study by the authors reports the effect of the tanpura drone, finding that multifractality increases uniformly in the alpha and theta frequency regions for all the frontal electrodes [64]. Figure 5 shows the variation of multifractal spectral width for a reference electrode F3 under the effect of the tanpura drone, while Figure 6(a-d) demonstrates the cumulative effect for 10 persons at different frontal electrodes [64].

Some excellent and rigorous research in the area of music was carried out at the ITC Sangeet Research Academy from 1983 to 2010 [65-76]. At present, a cohesive, broad-based and planned research effort in this direction has been spearheaded since 2005 by the Sir C V Raman Centre for Physics and Music, Jadavpur University, alongside sporadic research by individuals in various research organizations [77-85]. Although some books have been written by Indian scientists, very little experimental research has been carried out to date. Among Indian scientists, Dr. Santala Hegde of the Cognitive Psychology Unit, NIMHANS, Bangalore, is carrying out research in this area.

Conclusion

The study of music-induced emotions using a neuro-physical approach involving bio-sensors is relatively new. Because of its vast scope and its applications in everyday social life, extensive research is under way in different parts of the world to tackle the many unanswered questions this domain raises. High-quality research in music information retrieval, music psychology (from perception to cognition) and the cognitive neuroscience of music will be required to answer questions such as:

• how the auditory and motor systems interact to produce music.

• how people encode and recognize music.

• how music induces emotional reactions.

• how musical experience and training affect brain development.

• how musical training/exposure affects language, cognitive, and social abilities in both children and adults.

References

- Raman, Chandrasekhara Venkata (1921) "On some Indian stringed instruments." Proceedings of the Indian Association for the Cultivation of Science 7: 29-33.

- Raman CV (1935) “The Indian musical drums”, Proc Indian Acad Sci A 1: 179-188.

- Alicja A Wieczorkowska, Ashoke Kumar Datta, Ranjan Sengupta, Nityananda Dey, Bhaswati Mukherjee (2010) “On Search for Emotion in Hindusthani Vocal Music”, Adv. in Music Inform. Retrieval, SCI 274: 285-304, Springer-Verlag Berlin Heidelberg.

- Mockel M, Rocker L, Stork T (1994) Immediate physiological responses of healthy volunteers to different types of music: cardiovascular, hormonal and mental changes. Eur J Appl Physiol 68: 451-459.

- Möckel M, Stork T, Vollert J (1995) “Stress reduction through listening to music: effects on stress hormones, hemodynamics and mental state in patients with arterial hypertension and in healthy persons”. Dtsch Med Wochenschr 120: 745-752.

- Vollert JO, Störk T, Rose M (2002) “Reception of music in patients with systemic arterial hypertension and coronary artery disease: endocrine changes, hemodynamics and actual mood”. Perfusion 15: 142-152.

- Gerra G, Zaimovic A, Franchini D (1998) Neuroendocrine responses of healthy volunteers to ‘techno-music’: relationships with personality traits and emotional state. Int J Psychophysiol 28: 99-111.

- VanderArk SD, Ely D (1992) Biochemical and galvanic skin responses to music stimuli by college students in biology and music. Percept Mot Skills 74: 1079-1090.

- Conrad C, Niess H, Jauch K, Bruns CJ, Harti WH, et al. (2007) Overture for growth hormone: requiem for interleukin-6? Crit Care Med 35: 2709-2713.

- McCraty R, Atkinson M, Rein G, Watkins AD (1996) Music enhances the effect of positive emotional states on salivary IgA. Stress Med 12: 167-175.

- Suda M, Morimoto K, Obata A, Koizumi H, Maki A (2008) Emotional responses to music: towards scientific perspectives on music therapy. NeuroReport 19: 75-78.

- Henry, Linda L (1995) "Music therapy: a nursing intervention for the control of pain and anxiety in the ICU: a review of the research literature." Dimensions of critical care nursing 14: 295-304.

- Ciccone Marco Matteo (2010) "Feasibility and effectiveness of a disease and care management model in the primary health care system for patients with heart failure and diabetes (Project Leonardo)." Vascular health and risk management 6: 297.

- Ezzone Susan (1998) "Music as an adjunct to antiemetic therapy." Oncology nursing forum 25: 9.

- Migneault Brigitte (2004) "The effect of music on the neurohormonal stress response to surgery under general anesthesia." Anesthesia & Analgesia 98: 527-532.

- Seifert U (1993) Systematische Musiktheorie und Kognitionswissenschaft, Bonn: Verlag für systematische Musikwissenschaft: 69.

- Leman M (1994) ‘Schema-Based Tone Center Recognition of Musical Signals’, Journal of New Music Research, 23: 169-204.

- Chow AY, Chow VY, Packo KH, Pollack JS, Peyman GA, et al. (2004) ‘The Artificial Silicon Retina Microchip for the Treatment of Vision Loss From Retinitis Pigmentosa’, Archives of Ophthalmology 122: 460-469.

- Markram H (2006) ‘The Blue Brain Project’, Nature Reviews Neuroscience 7: 153-160.

- Shamma S (2001) ‘On the Role of Space and Time in Auditory Processing’, Trends in Cognitive Sciences, 5: 340-348.

- Smith EC and Lewicki MS (2006) ‘Efficient Auditory Coding.’ Nature 439: 978-982.

- Blankertz B, Müller KR, Curio G, Vaughan T, Schalk G, Schlogl A, Neuper C, Pfurtscheller G.

- Hinterberger T, Schroder M, Birbaumer N (2004) ‘The BCI Competition 2003: Progress and Perspectives in Detection and Discrimination of EEG Single Trials’, IEEE Transactions on Biomedical Engineering 51: 1044-1051.

- Eswaran H, Preissl H, Wilson JD, Murphy P, Robinson SE, et al. (2002) ‘Short-term Serial Magnetoencephalography Recordings of Fetal Auditory Evoked Responses’, Neuroscience Letters 331: 128-132.

- Stefanics G, Haden G, Huotilainen M, Balazs L, Sziller I, et al. (2007) ‘Auditory Temporal Grouping in Newborn Infants’, Psychophysiology, 44: 697-702.

- Koelsch S, Siebel WA (2005) ‘Towards a Neural Basis of Music Perception’, Trends in Cognitive Sciences, 9: 578-584.

- Maess B, Koelsch S, Gunter TC, Friederici AD (2001) ‘Musical Syntax is Processed in Broca’s Area: An MEG Study’ Nature Neuroscience 4: 540-545.

- Peretz I, Zatorre R (2005) ‘Brain Organization for Music Processing’, Annual Review of Psychology 56: 89-114.

- Saffran J, Johnson E, Aslin R, Newport E (1999) ‘Statistical Learning of Tone Sequences by Human Infants and Adults’ Cognition 70: 27-52.

- Leibniz GW (1712) ‘Letter to Christian Goldbach’ in Epistolae Ad Diversos, C Kortholt, Leipzig: Breitkopf: 240.

- Prather JF, Peters S, Nowicki S, Mooney R (2008) ‘Precise Auditory-Vocal Mirroring in Neurons for Learned Vocal Communication’ Nature 451: 305-310.

- Lu H, Wang M, Yu H (2005) EEG Model and Location in Brain when Enjoying Music. Proceedings of the 27th Annual IEEE Engineering in Medicine and Biology Conference, Shanghai, China: 2695-2698.

- Bhattacharya J, Petsche H (2005) Phase synchrony analysis of EEG during music perception reveals changes in functional connectivity due to musical expertise. Signal Processing 85: 2161-2177.

- WeiChih L, HungWen C, ChienYeh H (2005) Discovering EEG Signals Response to Musical Signal Stimuli by Time-frequency analysis and Independent Component Analysis. Proceedings of the 27th Annual IEEE Engineering in Medicine and Biology Conference, Shanghai, China: 2765-2768.

- Lin Yuanpin (2014) "Revealing spatio-spectral electroencephalographic dynamics of musical mode and tempo perception by independent component analysis." Journal of neuroengineering and rehabilitation 11: 18.

- Natarajan, Kannathal (2004) "Nonlinear analysis of EEG signals at different mental states." BioMedical Engineering OnLine 3: 7.

- Gao T (2007) "Detrended fluctuation analysis of the human EEG during listening to emotional music." J Elect Sci Tech Chin 5: 272-277.

- D Mantini, MG Perrucci, C Del Gratta, GL Romani, M Corbetta (2007) “Electrophysiological signatures of resting state networks in the human brain,” Proc. Nat. Acad. Sci. USA 104: 13170-13175.

- JJB Allen, JA Coan, M Nazarian (2004) “Issues and assumptions on the road from raw signals to metrics of frontal EEG asymmetry in emotion,” Biol Psychol 67: 183-218.

- LA Schmidt, LJ Trainor “Frontal brain electrical activity (EEG) distinguishes valence and intensity of musical emotions,” Cognit. Emotion 15: 487-500.

- Field T, Martinez A, Nawrocki T, Pickens J (1998) Music shifts frontal EEG in depressed adolescents. Adolescence 33: 109.

- W Heller (1993) “Neuropsychological mechanisms of individual differences in emotion, personality and arousal,” Neuropsychology 7: 476-489.

- M Sarlo, G Buodo, S Poli, D Palomba (2005) “Changes in EEG alpha power to different disgust elicitors: The specificity of mutilations,” Neurosci. Lett 382: 291-296.

- LI Aftanas, NV Reva, AA Varlamov, SV Pavlov, P Makhnev (2004) “Analysis of evoked EEG synchronization and desynchronization in conditions of emotional activation in humans: Temporal and topographic characteristics,” Neurosci Behav Physiol 34: 859-867.

- D Sammler, M Grigutsch, T Fritz, S Koelsch (2007) “Music and emotion: Electrophysiological correlates of the processing of pleasant and unpleasant music,” Psychophysiology 44: 293-304.

- Bhattacharya J, Petsche H (2001) Enhanced phase synchrony in the electroencephalograph γ band for musicians while listening to music. Physical Review E 64: 012902.

- E Basar, C Basar-Eroglu, S Karakas, M Schurmann (1999) “Oscillatory brain theory: A new trend in neuroscience - The role of oscillatory processes in sensory and cognitive functions,” IEEE Eng. Med. Biol. Mag 18: 56-66.

- K Ishino and M. Hagiwara (2003) “A feeling estimation system using a simple electroencephalograph,” in Proc IEEE Int Conf Syst Man Cybern 5: 4204-4209.

- K Takahashi (2004) “Remarks on emotion recognition from bio-potential signals,” in Proc. 2nd Int. Conf. Auton. Robots Agents: 13-15, pp: 186-191.

- G Chanel, J Kronegg, D Grandjean, T Pun (2006) “Emotion assessment: Arousal evaluation using EEGs and peripheral physiological signals,” Multimedia Content Representation, Classification Secur, 4105, pp: 530-537.

- A Heraz, R Razaki, C Frasson (2007) “Using machine learning to predict learner emotional state from brainwaves,” in Proc. 7th IEEE Int Conf Adv Learning Technol, pp: 853-857.

- G Chanel, JJM Kierkels, M Soleymani, T Pun (2009) “Short-term emotion assessment in a recall paradigm,” Int J Human Comput Stud 67: 8, pp: 607-627.

- KE Ko, HC Yang, KB Sim (2009) “Emotion recognition using EEG signals with relative power values and bayesian network,” Int. J. Control Autom Syst, 7: 5, pp: 865-870.

- Q Zhang and MH Lee (2009) “Analysis of positive and negative emotions in natural scene using brain activity and GIST,” Neurocomputing 72: 4–6, pp: 1302-1306.

- Karthick NG, AVI Thajudin, PK Joseph (2006) "Music and the EEG: A study using nonlinear methods." Biomedical and Pharmaceutical Engineering. ICBPE 2006. International Conference on IEEE.

- Geethanjali B, K Adalarasu and R Rajsekaran (2012) "Impact of music on brain function during mental task using electroencephalography." World Academy of Science, Engineering and Technology 66: 883-887.

- Chen YC, Wong KW, Kuo DC, Liao TY, Ke DM (2008) Wavelet Real Time Monitoring System: A Case Study of the Musical Influence on Electroencephalography. WSEAS Transactions on Systems 7, pp: 56-62.

- S Sanyal, A Banerjee, T Guhathakurta, R Sengupta, D Ghosh (2013) “EEG study on the neural patterns of Brain with Music Stimuli: An evidence of Hysteresis?”, Proceedings of the International Seminar on ‘Creating & Teaching Music Patterns’, Department of Instrumental Music, Rabindra Bharati University, Kolkata.

- S Sanyal, A Banerjee, A Patranabis, K Banerjee, N Dey, et al. (2014) “Voids and their importance in the signals of Hindustani vocal music”, Proceedings of the International Symposium FRSM-2014, All India Institute of Speech and Hearing, Mysore, India.

- S Sanyal, A Banerjee, A Patranabis, K Banerjee, N Dey, et al. “Use of Voids in Hindustani Vocal Music: A Cue For Style Recognition” (Communicated to Journal of Music And Meaning).

- R Sengupta, N Dey, AK Datta, D Ghosh (2005) “Assessment of Musical Quality of Tanpura by Fractal-Dimensional Analysis” Fractals 13: 245-252.

- M Braeunig, R Sengupta, A Patranabis (2012) “On Tanpura Drone and Brain Electrical Correlates” Lecture Notes in Computer Science 7172, pp. 53-65.

- A Banerjee, S Sanyal, R Sengupta, D Ghosh (2014) “Fractal Analysis for Assessment of complexity of Electroencephalography Signal due to audio stimuli” Journal of Harmonized Research in Applied Sciences 2: 300-310.

- Maity AK, Pratihar R, Mitra A, Dey S, Agrawal V, et al. (2015) Multifractal Detrended Fluctuation Analysis of alpha and theta EEG rhythms with musical stimuli. Chaos, Solitons & Fractals 81: 52-67.

- R Sengupta (1990) “Study on some Aspects of the ‘Singer’s Formant’ in North Indian Classical Singing”, J. of Voice 4: 2, pp: 129.

- BM Banerjee, D Nag (1991) “The Acoustical Character of Sounds from Indian Twin Drums” ACUSTICA (Europe): 75.

- R Sengupta, N Dey, BM Banerjee, D Nag, AK Datta, et al. (1995) “A Comparative Study Between the Spectral Structure of a Composite String Sound and the Thick String of a Tanpura” J Acoust Soc India: XXIII.

- R Sengupta, N Dey, BM Banerjee, D Nag, AK Datta, et al. (1996) “Some Studies on Spectral Dynamics of Tanpura Strings with Relation to Perception of Jwari” J. Acoust. Soc. India XXIV.

- R Sengupta, N Dey, D Nag, AK Datta (2001) “Comparative study of Fractal Behaviour in Quasi-Random and Quasi-Periodic Speech Wave Map”, Fractals 9: 403.

- R Sengupta, N Dey, D Nag, AK Datta (2001) “Study on the Relationship of Fractal Dimensions with Random Perturbations in Quasi-Periodic Speech Signal” J Acoust Soc India: 29.

- R Sengupta, N Dey, D Nag, AK Datta (2003) “Acoustic Cues for the Timbral Goodness of Tanpura”, J. Acoust. Soc. India: 31.

- R Sengupta, N Dey, D Nag, AK Datta, SK Parui (2004) “Objective evaluation of Tanpura from the sound signals using spectral features” J. ITC Sangeet Research Academy 18.

- R Sengupta, N Dey, AK Datta, D Ghosh (2005) “Assessment of Musical Quality of Tanpura by Fractal-Dimensional Analysis”, Fractals 13: 245-252.

- AK Datta, R Sengupta, N Dey, D Nag, A Mukerjee (2007) “Study of Melodic Sequences in Hindustani Ragas: A cue for Emotion?”, Proc. Frontiers of Research on Speech and Music (FRSM-2007) All India Institute of Speech and Hearing, Mysore.

- AK Datta, R Sengupta, N Dey, D Nag (2008) “Study of Non Linearity in Indian Flute by Fractal Dimension Analysis”, Ninaad (J. ITC Sangeet Research Academy): 22.

- R Sengupta, N Dey, AK Datta, D Ghosh, A Patranabis (2010) “Analysis of the Signal Complexity in Sitar Performances” Fractals 18: 2, pp: 265-270.

- R Sengupta, T Guhathakurta, D Ghosh, AK Datta (2012) “Emotion induced by Hindustani music - A cross-cultural study based on listeners response”, Proceedings of International Symposium FRSM-2012, KIIT College of Engineering, Gurgaon, India.

- A Patranabis, K Banerjee, R Sengupta, D Ghosh (2012) “Search for spectral cues of Emotion in Hindustani music”, Proc. of the National Symposium on Acoustics-2012 (NSA-2012), KSR Institute for Engineering and Technology, KSR Kalvi Nagar, Tiruchengode-637215, Tamilnadu.

- R Sengupta, A Patranabis, K Banerjee, T Guhathakurta, S Sarkar, et al. (2012) “Processing for Emotions induced by Hindustani Instrumental music”, Proc. of the National Symposium on Acoustics-2012 (NSA-2012), KSR Institute for Engineering and Technology, KSR Kalvi Nagar, Tiruchengode-637215, Tamilnadu.

- S Sanyal, A Banerjee, A Patranabis, K Banerjee, N Dey (2014) “Voids and their importance in the signals of Hindustani vocal music”, Proc. of the International Symposium FRSM-2014, AIISH, Mysore, India.

- D Ghosh, R Sengupta, T Guhathakurta, A Banerjee, S Sanyal (2013) “Neuro-Cognitive Physics approach using Bio-Sensors (EEG) to investigate raga induced emotion recognition process in the Brain” Proc. of the West Bengal State Science and Technology Congress, Bengal Engineering and Science University, Sibpur, Howrah.

- A Banerjee, S Sanyal, T Guhathakurta, A Patranabis, K Banerjee (2014) “Evidence of hysteresis from the study of nonlinear dynamics of brain with music stimuli” Proceedings of the International Symposium FRSM-2014, All India Institute of Speech and Hearing, Mysore, India.

- A Patranabis, S Sanyal, A Banerjee, K Banerjee, T Guhathakurta, N. Dey, R. Sengupta and D. Ghosh “Measurement of emotion induced by Hindustani Music - A human response and EEG study”, Ninaad (J. ITC Sangeet Res Acad.) 26-27.

- A Patranabis, K Banerjee, R Sengupta, D Ghosh “Objective Analysis of the Timbral quality of Sitars having Structural change over Time”, Ninaad (J ITC Sangeet Research Academy), 25, pp: 1-7.

- A Patranabis, R Sengupta, T Guhathakurta, A Deb, D Ghosh (2011) “Processing of Tabla strokes by Wavelet analysis”, Proceedings of National Symposium on Acoustics, Acoustic Waves, Shree Publishers and Distributors, N Delhi-110002, pp: 101-112.
